3 Ways to Use Screaming Frog SEO Spider to Analyze Your Site

Each week, a member of our team leads a professional development training session to help build skills across the team. Over the next few months, expect to see one post per week with key takeaways, tips and information from each session.

This week’s post shares three useful tips from a Screaming Frog training session led by John Fairley. Screaming Frog SEO Spider is a great tool for analyzing your website: it finds internal and external links, response codes for the pages on your site, possible SEO issues and much more. Three nice features of Screaming Frog are summary stats, custom filters and the ability to set your user agent.

Crawl Overview Report and Summary Stats

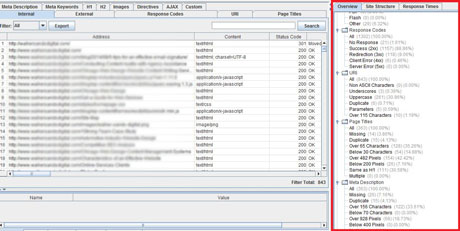

The summary stats feature gives you an overview of the important information found during a crawl of your website. It summarizes the different elements on your site and gives you a quick look at potential issues or improvements. For example, the “Meta Description” section shows how many pages are missing a meta description, have duplicate descriptions, have descriptions that are too long and much more. This is useful when you are optimizing your site and want to see how many of your pages need attention. The image below shows an example of the summary stats section in Screaming Frog.
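To make the “Meta Description” counts more concrete, here is a minimal Python sketch of how such a summary could be computed from crawl data. It is not Screaming Frog's implementation: it assumes you already have each URL's meta description in a dictionary, the function name is hypothetical, and the 155-character limit for “too long” is an assumed threshold chosen for illustration.

```python
from __future__ import annotations

from collections import Counter

# Assumed threshold for a "too long" description; adjust to your own limit.
MAX_DESCRIPTION_LENGTH = 155


def summarize_meta_descriptions(pages: dict[str, str | None]) -> dict[str, int]:
    """Count missing, duplicate and over-long meta descriptions.

    `pages` maps each URL to its meta description (None or "" when absent).
    """
    # Keep only pages that actually have a non-blank description.
    present = {url: desc.strip() for url, desc in pages.items() if desc and desc.strip()}
    occurrences = Counter(present.values())
    return {
        "missing": len(pages) - len(present),
        # A page counts as a duplicate if another page shares its exact text.
        "duplicate": sum(1 for desc in present.values() if occurrences[desc] > 1),
        "too_long": sum(1 for desc in present.values() if len(desc) > MAX_DESCRIPTION_LENGTH),
    }


print(summarize_meta_descriptions({
    "/": "Digital marketing agency",
    "/about": "Digital marketing agency",
    "/contact": None,
    "/blog": "x" * 200,
}))
# {'missing': 1, 'duplicate': 2, 'too_long': 1}
```

Counting every page that shares a description (rather than only the extra copies) is a design choice here; it matches the question “how many pages need a new description?”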

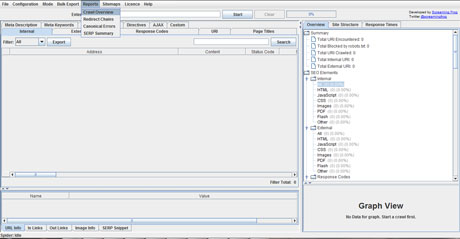

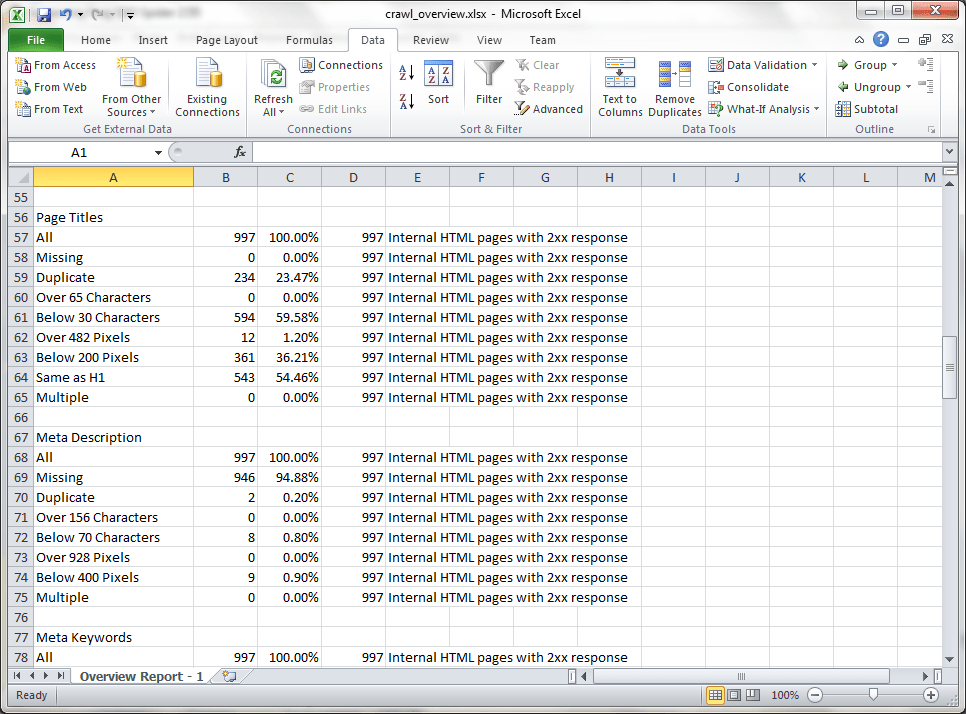

After looking at these summary stats, you might want to export your findings to an Excel document for future use or to share the report with another member of your team. To export the stats, go to the “Reports” drop-down menu and select “Crawl Overview.”

After you run the report, the summary stats are exported to an Excel document, perfect for saving or sharing.

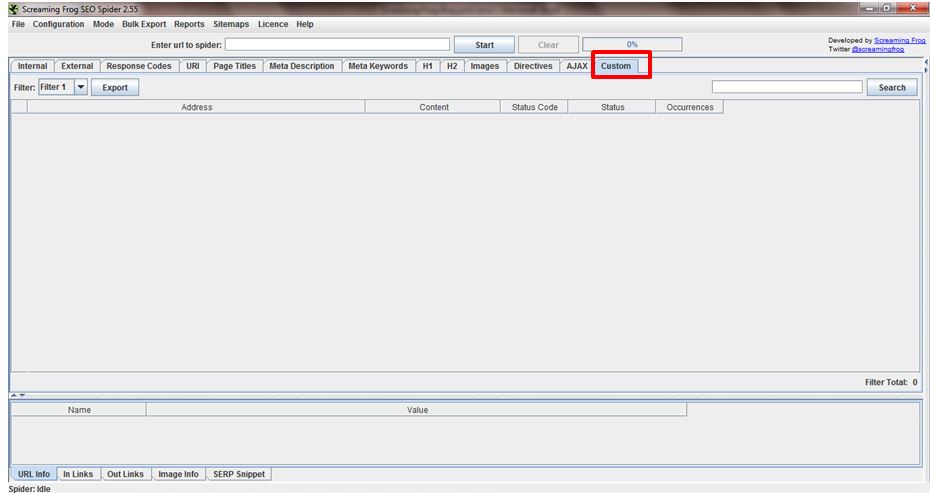

Custom Filters

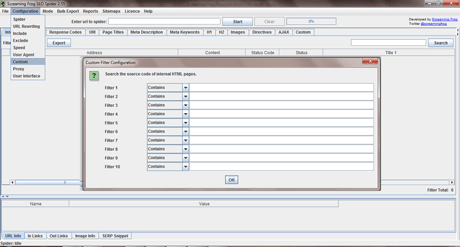

A really nice feature in Screaming Frog for finding more specific elements on your site is the custom filters tab. Custom filters let you enter criteria to find specific items on your site's pages. Screaming Frog reads the HTML of each page on your site, so anything in the HTML can be found with a custom filter. For example, when we moved offices two weeks ago, I used Screaming Frog to find all the pages on our website that contained our old address. I set the custom filter to find every page that contained the word “Jefferson” or “121” in its source. That way I could find every place on our website that listed our old address and change it to the new one.

To set a custom filter, go to “Configuration” >> “Custom” before crawling the site and type in the filter you want. Then crawl the site; every page that matches your filter will appear in the “Custom” tab in Screaming Frog.
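The idea behind the old-address search can be sketched in a few lines of Python. This is a minimal sketch assuming you already have each page's HTML source in a dictionary keyed by URL; the function name `pages_containing` is hypothetical, and it mimics a simple “contains” match rather than reproducing Screaming Frog's own filter logic.

```python
from __future__ import annotations

# Search terms from the old-address example above.
OLD_ADDRESS_TERMS = ["Jefferson", "121"]


def pages_containing(pages: dict[str, str], terms: list[str]) -> list[str]:
    """Return the URLs whose HTML source contains any of `terms`.

    `pages` maps each URL to the raw HTML fetched for it. Matching is a
    case-sensitive substring check on the source, not on the rendered text.
    """
    return sorted(url for url, html in pages.items() if any(term in html for term in terms))


crawl = {
    "/contact": "<p>Suite 121</p>",
    "/about": "<p>Meet the team</p>",
    "/footer": "<footer>Jefferson office</footer>",
}
print(pages_containing(crawl, OLD_ADDRESS_TERMS))
# ['/contact', '/footer']
```

Because this is a plain substring match on the source, a short term such as “121” can also match unrelated markup (a pixel width or an ID, for example), so expect to review the matches by hand.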

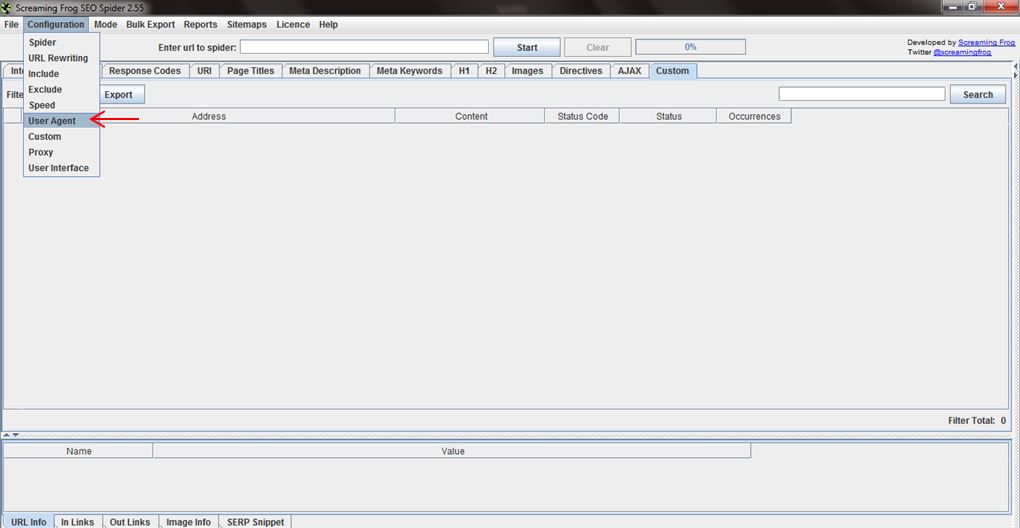

Setting Your User Agent: Crawl Like Google

Sometimes when you are analyzing and working on your website, you might want to see what Google finds when it crawls your site. Crawling your site as a search engine can reveal differences between what search engines and regular visitors see. For example, a search engine might see a different number of links than a user does.
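One way to check for that kind of difference yourself is to compare the links in two copies of the same page, one served to a regular browser and one served to a search engine user agent. The Python sketch below assumes you have already saved both HTML snapshots; the helper names are hypothetical, and it only sees links present in the raw HTML, not ones added later by JavaScript.

```python
from __future__ import annotations

from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.links: set[str] = set()

    def handle_starttag(self, tag: str, attrs: list[tuple[str, str | None]]) -> None:
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.add(href)


def extract_links(html: str) -> set[str]:
    """Return the set of distinct link targets found in `html`."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    return collector.links


def compare_links(user_html: str, bot_html: str) -> dict[str, set[str]]:
    """Report links that appear in only one of the two snapshots."""
    user_links = extract_links(user_html)
    bot_links = extract_links(bot_html)
    return {
        "only_user": user_links - bot_links,
        "only_bot": bot_links - user_links,
    }


print(compare_links('<a href="/a">A</a><a href="/b">B</a>', '<a href="/a">A</a>'))
# {'only_user': {'/b'}, 'only_bot': set()}
```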

In Screaming Frog, the “Set Your User Agent” function lets you crawl as a regular user, GoogleBot, Yahoo and more. To set your user agent to GoogleBot, follow the steps below:

Under the Configuration menu item, select “User Agent.”

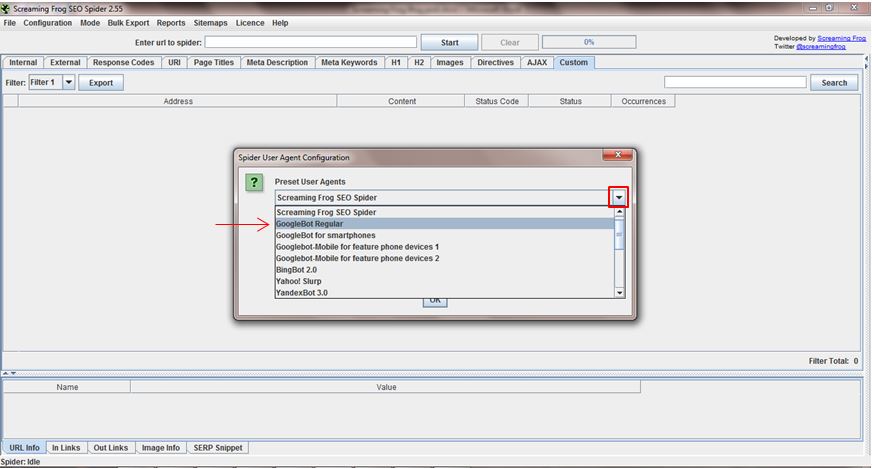

After you click “User Agent,” a pop-up box will appear. Under “Preset User Agents,” click the dropdown arrow and select “GoogleBot Regular.”

Run the crawl, and the results will show your site as crawled with the GoogleBot user agent.
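Outside of Screaming Frog, crawling “as GoogleBot” comes down to sending requests whose `User-Agent` header identifies as Googlebot. The Python sketch below uses only the standard library; `GOOGLEBOT_UA` is one commonly cited Googlebot string (Google documents several variants), and the function names are hypothetical.

```python
from urllib.request import Request, urlopen

# A commonly cited Googlebot user-agent string; Google documents several variants.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def build_request(url: str, user_agent: str = GOOGLEBOT_UA) -> Request:
    """Build a GET request that identifies itself with `user_agent`."""
    return Request(url, headers={"User-Agent": user_agent})


def fetch_html(url: str, user_agent: str = GOOGLEBOT_UA, timeout: float = 10.0) -> str:
    """Download a page's HTML while presenting the given user agent."""
    with urlopen(build_request(url, user_agent), timeout=timeout) as response:
        # Fall back to UTF-8 when the server does not declare a charset.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

Calling `fetch_html(url)` and then `fetch_html(url, user_agent=...)` with a browser-style string gives two versions of the same page to compare. Only the header changes here: a site that verifies Googlebot by other means, such as a reverse DNS lookup, may treat this request differently from a genuine Googlebot visit.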

If you have any questions about the above items or want to learn more about Screaming Frog, fill out the form.